To use the Levenshtein method, add an `AS-` prefix to the metric name, e.g.: `suber -H hypothesis.srt -R reference.srt --metrics AS-BLEU`

The `AS-` prefix terminology is taken from Matusov et al. To use the time-alignment method instead, add a `t-` prefix. This works for all metrics (except for SubER itself, which does not require re-segmentation). In particular, we implement `t-BLEU` from Cherry et al. We encode the segmentation method (or lack thereof) in the metric name to explicitly distinguish the different resulting metric scores.

To inspect the re-segmentation applied to the hypothesis, you can use the `align_hyp_to_ref.py` tool (run `python -m _hyp_to_ref -h` for help).

In case of Levenshtein alignment, there is also the option to give a plain file as the reference.
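The prefix convention described here can be sketched in a few lines of Python. This is only an illustration of the naming scheme, not code from the SubER package: the function `metric_name`, the `PREFIXES` table and the method labels `"levenshtein"` and `"time"` are hypothetical names chosen for this example.

```python
from typing import Optional

# Hypothetical labels for the two re-segmentation methods, mapped to the
# metric-name prefixes described in the text.
PREFIXES = {"levenshtein": "AS-", "time": "t-"}


def metric_name(base: str, resegmentation: Optional[str] = None) -> str:
    """Build a metric name such as 'AS-BLEU' or 't-BLEU' (illustrative helper)."""
    if resegmentation is None:
        # No re-segmentation requested: the plain metric name is used.
        return base
    if base.startswith("SubER"):
        # SubER itself does not require re-segmentation, so no prefix applies.
        raise ValueError("SubER does not require re-segmentation")
    if resegmentation not in PREFIXES:
        raise ValueError(f"unknown re-segmentation method: {resegmentation}")
    return PREFIXES[resegmentation] + base


if __name__ == "__main__":
    print(metric_name("BLEU", "levenshtein"))
    print(metric_name("BLEU", "time"))
```

The resulting string is what would be passed after `--metrics` on the command line, so the segmentation method stays visible wherever the score is reported.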

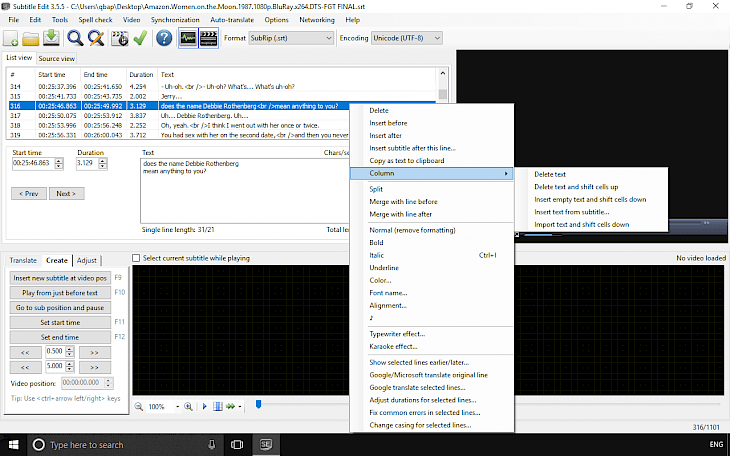

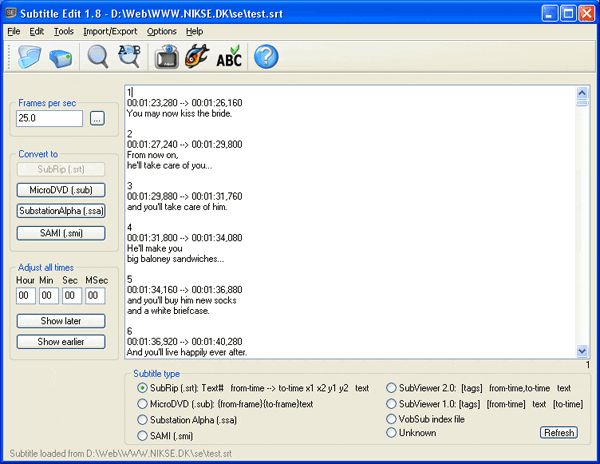

## Basic Usage

Currently, we expect subtitle files to come in SubRip text (SRT) format. Given a human reference subtitle file `reference.srt` and a hypothesis file `hypothesis.srt` (typically the output of an automatic subtitling system), the SubER score can be calculated by running: `suber -H hypothesis.srt -R reference.srt`

The SubER score is printed to stdout in JSON format. As SubER is an edit rate, lower scores are better. As a rough rule of thumb from our experience, a score lower than 20(%) is very good quality, while a score above 40 to 50(%) is bad.

Make sure that there is no constant time offset between the timestamps in hypothesis and reference, as this will lead to incorrect scores. Also, note that `<i>`, `<b>` and `<u>` formatting tags are ignored if present in the files. All other formatting must be removed from the files before scoring for accurate results.

The main SubER metric is computed on normalized text, which means case-insensitive and without taking punctuation into account, as we observe higher correlation with human judgements and post-edit effort in this setting. We provide an implementation of a case-sensitive variant which also uses a tokenizer to take punctuation into account as separate tokens. You can use it "at your own risk" or to reassess our findings. For this, add `--metrics SubER-cased` to the command above. Please do not report results using this variant as "SubER" unless explicitly mentioning the punctuation-/case-sensitivity.

The SubER tool also supports computing other metrics directly on subtitle files. BLEU, TER and chrF calculations are done using SacreBLEU with default settings. WER is computed with JiWER on normalized text (lower-cased, punctuation removed). This assumes that `hypothesis.srt` and `reference.srt` are parallel.
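Since the score is printed as JSON, it is easy to post-process in a script. The following is a minimal sketch that parses such output and applies the rule of thumb given above. It assumes the JSON object maps the metric name to a number (for example `{"SubER": 19.048}`), which is an assumption about the exact output layout. The function `interpret_suber` and the cut-off parameter `bad_threshold` are hypothetical; the text only places the "bad" region somewhere above 40 to 50.

```python
import json
from typing import Tuple


def interpret_suber(stdout_text: str, metric: str = "SubER",
                    bad_threshold: float = 40.0) -> Tuple[float, str]:
    """Parse JSON scores and label the chosen score with the rough rule of thumb."""
    scores = json.loads(stdout_text)  # assumed layout: {"<metric name>": <score>}
    score = float(scores[metric])
    if score < 20:
        label = "very good"  # lower is better, since SubER is an edit rate
    elif score > bad_threshold:
        label = "bad"  # assumption: 40 is used as the start of the "bad" range
    else:
        label = "in between"
    return score, label


if __name__ == "__main__":
    # Made-up example output, not a real measurement.
    example_output = '{"SubER": 19.048}'
    print(interpret_suber(example_output))
```

In practice the string would come from capturing the stdout of the `suber` command, for example with `subprocess.run(..., capture_output=True, text=True)`.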


SubER is an automatic, reference-based, segmentation- and timing-aware edit distance metric to measure quality of subtitle files. For a detailed description of the metric and a human post-editing evaluation we refer to our IWSLT 2022 paper. In addition to the SubER metric, this scoring tool calculates a wide range of established speech recognition and machine translation metrics (WER, BLEU, TER, chrF) directly on subtitle files.

## Installation

`pip install subtitle-edit-rate` will install the `suber` command line tool. Alternatively, check out this git repository and run the contained `suber` module with `python -m suber`.
